It is now very easy to use Coherence with ADF BC: you don't need to program Java or know much about Coherence. It takes only five steps.

Step 1: Create an EJB entity.

Step 2: Add the new entity to the Coherence configuration XML and start Coherence on one or more servers.

Step 3: Create an ADF BC entity (with the same attributes and Java types as the EJB entity) and override its entity row class.

Step 4: On the newly created entity, create a new default view object and override its view object class.

Step 5: Fill the cache and start the ADF BC web application.

That's all.

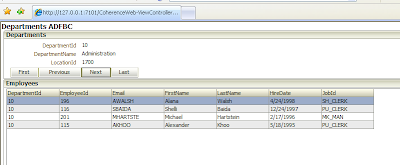

Here is a picture of an ADF page that uses the Coherence view objects. ADF BC master-detail relations (view links) are supported too.

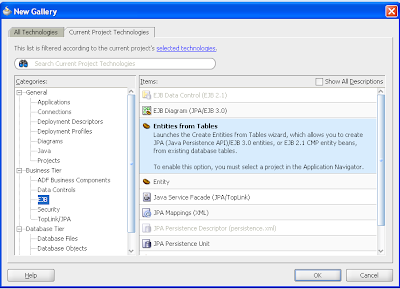

We start by adding a new EJB entity.

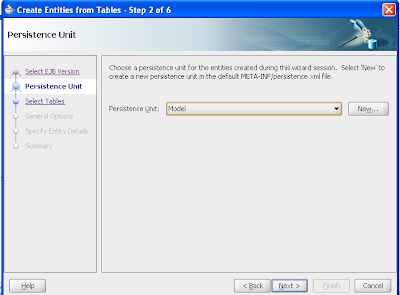

Create a new persistence unit. Its name must match the persistence unit name in the Coherence configuration XML.

Select only one table at a time; otherwise the foreign key attributes will be replaced by relation classes.
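For illustration, this is roughly the shape a single-table Employees entity needs for the reflection-based base classes later in this post to work: the foreign key stays a plain scalar with a get/set pair named after the ADF BC attribute. This is a hypothetical sketch, not the class JDeveloper generates: the JPA annotations (`@Entity`, `@Id` and so on) are left out so it compiles with a plain JDK, and the field names and `Long` types are assumptions based on the HR schema. It implements `Serializable` because objects stored in a distributed Coherence cache have to be serializable (Java serialization being the default).

```java
import java.io.Serializable;

// Hypothetical sketch of the Employees EJB entity shape; JPA annotations omitted.
class Employees implements Serializable {
    private static final long serialVersionUID = 1L;

    private Long employeeId;
    private String lastName;
    // Foreign key kept as a scalar because only EMPLOYEES was selected.
    // With DEPARTMENTS selected too, a relation field (e.g. Departments departments)
    // would replace it, and there would be no setDepartmentId(Long) for the
    // ADF BC attribute DepartmentId to call.
    private Long departmentId;

    public Long getEmployeeId() { return employeeId; }
    public void setEmployeeId(Long employeeId) { this.employeeId = employeeId; }

    public String getLastName() { return lastName; }
    public void setLastName(String lastName) { this.lastName = lastName; }

    public Long getDepartmentId() { return departmentId; }
    public void setDepartmentId(Long departmentId) { this.departmentId = departmentId; }
}
```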


We need to change persistence.xml and add some extra JDBC properties. Coherence doesn't use the data source, so we need to add the eclipselink.jdbc properties. Replace my values with your own database values.

```xml
<?xml version="1.0" encoding="windows-1252" ?>
<persistence xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://java.sun.com/xml/ns/persistence http://java.sun.com/xml/ns/persistence/persistence_1_0.xsd"
             version="1.0" xmlns="http://java.sun.com/xml/ns/persistence">
  <persistence-unit name="Model">
    <provider>org.eclipse.persistence.jpa.PersistenceProvider</provider>
    <jta-data-source>java:/app/jdbc/jdbc/hrDS</jta-data-source>
    <class>nl.whitehorses.coherence.model.Departments</class>
    <class>nl.whitehorses.coherence.model.Employees</class>
    <properties>
      <property name="eclipselink.target-server" value="WebLogic_10"/>
      <property name="javax.persistence.jtaDataSource"
                value="java:/app/jdbc/jdbc/hrDS"/>
      <property name="eclipselink.jdbc.driver"
                value="oracle.jdbc.OracleDriver"/>
      <property name="eclipselink.jdbc.url"
                value="jdbc:oracle:thin:@localhost:1521:ORCL"/>
      <property name="eclipselink.jdbc.user" value="hr"/>
      <property name="eclipselink.jdbc.password" value="hr"/>
    </properties>
  </persistence-unit>
</persistence>
```

Download Coherence from Oracle and put my start script and my Coherence configuration file in the bin folder of the Coherence home.

Here is my configuration XML, in which I add a new cache-mapping for every EJB entity. As long as the EJB entities are in the same package and use the same persistence unit, they can all share the same distributed-scheme.

```xml
<?xml version="1.0" encoding="windows-1252" ?>
<cache-config>
  <caching-scheme-mapping>
    <cache-mapping>
      <cache-name>Employees</cache-name>
      <scheme-name>jpa-distributed</scheme-name>
    </cache-mapping>
    <cache-mapping>
      <cache-name>Departments</cache-name>
      <scheme-name>jpa-distributed</scheme-name>
    </cache-mapping>
  </caching-scheme-mapping>
  <caching-schemes>
    <distributed-scheme>
      <scheme-name>jpa-distributed</scheme-name>
      <service-name>JpaDistributedCache</service-name>
      <backing-map-scheme>
        <read-write-backing-map-scheme>
          <internal-cache-scheme>
            <local-scheme/>
          </internal-cache-scheme>
          <cachestore-scheme>
            <class-scheme>
              <class-name>com.tangosol.coherence.jpa.JpaCacheStore</class-name>
              <init-params>
                <!-- JPA entity name: the name of the cache -->
                <init-param>
                  <param-type>java.lang.String</param-type>
                  <param-value>{cache-name}</param-value>
                </init-param>
                <!-- fully qualified class name of the EJB entity -->
                <init-param>
                  <param-type>java.lang.String</param-type>
                  <param-value>nl.whitehorses.coherence.model.{cache-name}</param-value>
                </init-param>
                <!-- persistence unit name from persistence.xml -->
                <init-param>
                  <param-type>java.lang.String</param-type>
                  <param-value>Model</param-value>
                </init-param>
              </init-params>
            </class-scheme>
          </cachestore-scheme>
        </read-write-backing-map-scheme>
      </backing-map-scheme>
      <autostart>true</autostart>
    </distributed-scheme>
  </caching-schemes>
</cache-config>
```
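The `{cache-name}` macro is what lets one distributed-scheme serve all entities: Coherence replaces it with the name of the cache being created, so the `Employees` cache gets the entity class `nl.whitehorses.coherence.model.Employees`. The Java base classes in this post depend on the same convention: `CoherenceEntityImpl` looks up the cache by the entity definition name and `CoherenceViewObjectImpl` by the view definition name, so as far as I can tell both must be named exactly like the EJB class. The helper below is only a hypothetical plain-Java sketch that spells this convention out; it is not part of Coherence or ADF.

```java
// Hypothetical helper spelling out the naming convention used in this post:
// Coherence cache name == ADF BC entity/view definition name == EJB class simple name.
final class CacheNaming {
    // Package of the EJB entities, as in the second JpaCacheStore init-param.
    static final String ENTITY_PACKAGE = "nl.whitehorses.coherence.model.";

    private CacheNaming() {
    }

    // What "nl.whitehorses.coherence.model.{cache-name}" resolves to for a given cache.
    static String entityClassFor(String cacheName) {
        return ENTITY_PACKAGE + cacheName;
    }

    // The cache an EJB entity class ends up in.
    static String cacheNameFor(Class<?> entityClass) {
        return entityClass.getSimpleName();
    }

    // True if the class lives in the configured package, so the shared
    // jpa-distributed scheme resolves {cache-name} back to this exact class.
    static boolean servedBySharedScheme(Class<?> entityClass) {
        return entityClass.getName().equals(entityClassFor(cacheNameFor(entityClass)));
    }
}
```

A class in another package, or a view object named `EmployeesView`, would fall outside this convention and need its own mapping or scheme.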

Add these parameters to the run options of the Model and ViewController projects.

```
-Dtangosol.coherence.distributed.localstorage=false -Dtangosol.coherence.log.level=3 -Dtangosol.coherence.cacheconfig=d:\oracle\coherence\bin\jpa-cache-config-web.xml
```

Now JDeveloper knows where to find the Coherence cache.
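If the view objects come back empty, one thing to rule out is that these options never reached the JVM. The class below is a hypothetical plain-Java check, not part of Coherence; you could, for example, log `CoherenceRunOptionsCheck.missing(System.getProperties())` at startup. The comments describe what the two checked options do.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical helper: lists the Coherence run options from this post that are missing.
final class CoherenceRunOptionsCheck {
    private CoherenceRunOptionsCheck() {
    }

    static List<String> missing(Properties props) {
        List<String> missing = new ArrayList<String>();
        // "false" makes this JVM a storage-disabled cluster member: it reads and
        // writes the caches, but the data is held by the separately started cache servers.
        if (!"false".equals(props.getProperty("tangosol.coherence.distributed.localstorage"))) {
            missing.add("tangosol.coherence.distributed.localstorage=false");
        }
        // Without it Coherence falls back to its default cache configuration,
        // which knows nothing about the JpaCacheStore scheme.
        if (props.getProperty("tangosol.coherence.cacheconfig") == null) {
            missing.add("tangosol.coherence.cacheconfig");
        }
        return missing;
    }
}
```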

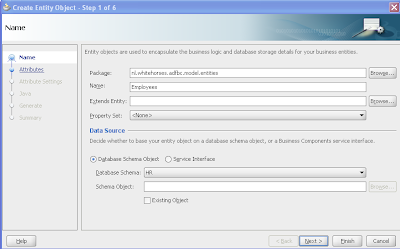

Create a new entity with the same name as the EJB entity and don't select a schema object. We will add our own attributes to this ADF BC entity.

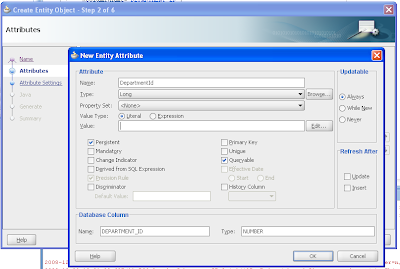

Add at least all the mandatory attributes to this entity. These attributes need to have the same name and Java type as in the EJB entity.
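Because the base classes below find the EJB getters and setters by name (`get`/`set` plus the attribute name) and by exact Java type, a mismatch only shows up at runtime. A small hypothetical check like this one, run against your EJB entity class with the ADF BC attribute names and types, catches it earlier. `SampleDepartments` and the attribute maps are made up for the example. Note that `getMethod` matches parameter types exactly, so `Long` and `long` count as different.

```java
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical check: does an EJB class have get/set methods for every ADF BC attribute?
final class AttributeContractCheck {
    // Made-up stand-in for the Departments EJB entity.
    static final class SampleDepartments {
        private Long departmentId;
        private String departmentName;
        public Long getDepartmentId() { return departmentId; }
        public void setDepartmentId(Long v) { departmentId = v; }
        public String getDepartmentName() { return departmentName; }
        public void setDepartmentName(String v) { departmentName = v; }
    }

    private AttributeContractCheck() {
    }

    // adfAttributes: ADF BC attribute name -> Java type. Returns one message per mismatch.
    static List<String> check(Class<?> ejbClass, Map<String, Class<?>> adfAttributes) {
        List<String> problems = new ArrayList<String>();
        for (Map.Entry<String, Class<?>> a : adfAttributes.entrySet()) {
            String name = a.getKey();
            Class<?> type = a.getValue();
            try {
                Method getter = ejbClass.getMethod("get" + name);
                if (!getter.getReturnType().equals(type)) {
                    problems.add(name + ": EJB type is " + getter.getReturnType().getName()
                                 + ", ADF BC type is " + type.getName());
                    continue;
                }
                // exact parameter type match, the same lookup the EntityImpl does
                ejbClass.getMethod("set" + name, type);
            } catch (NoSuchMethodException e) {
                problems.add(name + ": no get/set pair on " + ejbClass.getSimpleName());
            }
        }
        return problems;
    }
}
```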

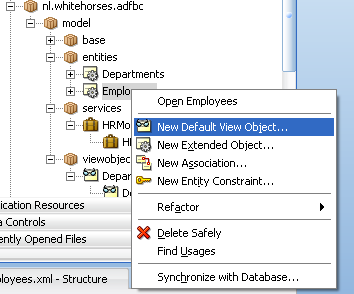


When we are finished, we can create a new default view.

I created a new EntityImpl (inspired by the great work of Steve and Clemens). This EntityImpl has its own doDML, in which I use reflection to dynamically add or update entries in the Coherence cache.


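```java
package nl.whitehorses.adfbc.model.base;

import com.tangosol.net.CacheFactory;
import com.tangosol.net.NamedCache;

import java.lang.reflect.Method;

import oracle.jbo.AttributeDef;
import oracle.jbo.server.EntityImpl;
import oracle.jbo.server.TransactionEvent;

public class CoherenceEntityImpl extends EntityImpl {

    // Package of the EJB entities; the EJB class must have the same
    // simple name as the ADF BC entity (and as the Coherence cache).
    private static final String EJB_PACKAGE = "nl.whitehorses.coherence.model.";

    /**
     * No database row to select or lock: the data lives in Coherence.
     */
    protected void doSelect(boolean lock) {
    }

    /**
     * Writes the entity to the Coherence cache instead of the database.
     * The cache name equals the entity definition name, and the first
     * attribute is used as the cache key.
     */
    protected void doDML(int operation, TransactionEvent e) {
        String entityName = getEntityDef().getName();
        NamedCache cache = CacheFactory.getCache(entityName);

        if (operation == DML_INSERT || operation == DML_UPDATE) {
            try {
                // Create a new EJB entity instance and copy every attribute
                // into it through its setter, e.g. setDepartmentId(Long).
                Class<?> clazz = Class.forName(EJB_PACKAGE + entityName);
                Object clazzInst = clazz.newInstance();
                AttributeDef[] allDefs = getEntityDef().getAttributeDefs();
                for (AttributeDef single : allDefs) {
                    Method m = clazz.getMethod("set" + single.getName(),
                                               new Class[] { single.getJavaType() });
                    m.invoke(clazzInst,
                             new Object[] { getAttribute(single.getName()) });
                }
                // Insert or replace the entry; the write-through JpaCacheStore
                // of the read-write backing map persists it to the database.
                cache.put(getAttribute(0), clazzInst);
            } catch (Exception ee) {
                // Fail the transaction instead of silently swallowing the error.
                throw new RuntimeException("Could not write " + entityName +
                                           " to Coherence", ee);
            }
        } else if (operation == DML_DELETE) {
            cache.remove(getAttribute(0));
        }
    }
}
```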

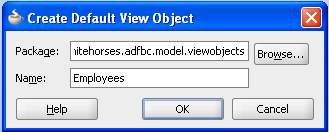

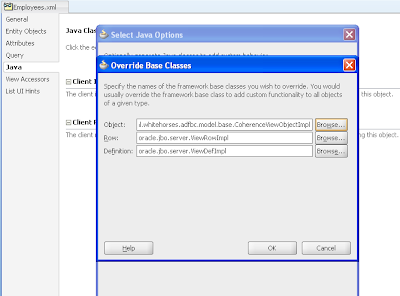

Override the just-created ADF BC entity class with this EntityImpl.

Here is the ViewObjectImpl I use to override the view objects.
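To see what `doDML` does without ADF or Coherence on the classpath, here is a plain-Java sketch of the same idea: a `HashMap` stands in for the `NamedCache`, and an ordered map of attribute names to Java types plays the role of the entity's `AttributeDef`s. All names are hypothetical; as in `doDML`, the first attribute is used as the cache key.

```java
import java.lang.reflect.Method;
import java.util.LinkedHashMap;
import java.util.Map;

// Plain-JDK sketch of CoherenceEntityImpl.doDML: a Map stands in for the NamedCache.
final class ReflectiveCacheWriter {
    enum Op { INSERT, UPDATE, DELETE }

    // Hypothetical POJO standing in for an EJB entity such as Departments.
    static final class SampleDept {
        private Long departmentId;
        private String departmentName;
        public Long getDepartmentId() { return departmentId; }
        public void setDepartmentId(Long v) { departmentId = v; }
        public String getDepartmentName() { return departmentName; }
        public void setDepartmentName(String v) { departmentName = v; }
    }

    private ReflectiveCacheWriter() {
    }

    // types: attribute name -> Java type, in attribute order (like getAttributeDefs()).
    // values: attribute name -> current value (like getAttribute(name)).
    static void apply(Map<Object, Object> cache, Op op, Class<?> pojoClass,
                      LinkedHashMap<String, Class<?>> types, Map<String, Object> values) {
        // Like getAttribute(0): the first attribute is the cache key.
        Object key = values.get(types.keySet().iterator().next());
        if (op == Op.DELETE) {
            cache.remove(key);
            return;
        }
        try {
            Object pojo = pojoClass.getDeclaredConstructor().newInstance();
            for (Map.Entry<String, Class<?>> attr : types.entrySet()) {
                // e.g. setDepartmentId(Long) for attribute DepartmentId
                Method setter = pojoClass.getMethod("set" + attr.getKey(), attr.getValue());
                setter.invoke(pojo, new Object[] { values.get(attr.getKey()) });
            }
            cache.put(key, pojo); // insert and update are both a put
        } catch (Exception e) {
            throw new IllegalStateException("Could not copy attributes to " + pojoClass.getName(), e);
        }
    }
}
```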

```java
package nl.whitehorses.adfbc.model.base;

import com.tangosol.net.CacheFactory;
import com.tangosol.net.NamedCache;
import com.tangosol.util.Filter;
import com.tangosol.util.filter.AllFilter;
import com.tangosol.util.filter.EqualsFilter;
import com.tangosol.util.filter.IsNotNullFilter;

import java.lang.reflect.Method;
import java.sql.ResultSet;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.Set;

import oracle.jbo.AttributeDef;
import oracle.jbo.Row;
import oracle.jbo.ViewCriteria;
import oracle.jbo.server.AttributeDefImpl;
import oracle.jbo.server.ViewObjectImpl;
import oracle.jbo.server.ViewRowImpl;
import oracle.jbo.server.ViewRowSetImpl;

public class CoherenceViewObjectImpl extends ViewObjectImpl {

    private NamedCache cache;

    /**
     * executeQueryForCollection - overridden for custom java data source support.
     * Turns view criteria or view link bind variables into Coherence filters
     * and queries the cache that has the same name as the view definition.
     */
    protected void executeQueryForCollection(Object qc, Object[] params,
                                             int noUserParams) {
        cache = CacheFactory.getCache(getViewDef().getName());
        List<Filter> filterList = new ArrayList<Filter>();

        // get the currently set view criteria
        ViewCriteria vc = getViewCriteria();
        if (vc != null) {
            Row vcr = vc.first();
            // get all attributes and check which ones are filled
            for (AttributeDef attr : getAttributeDefs()) {
                Object s = vcr.getAttribute(attr.getName());
                if (s != null && !"".equals(s)) {
                    // EqualsFilter calls get<Attribute>() on each cached object
                    filterList.add(new EqualsFilter("get" + attr.getName(), s));
                }
            }
        } else if (params != null && params.length > 0) {
            // view link (master-detail) bind variables as { name, value } pairs
            for (int i = 0; i < params.length; i++) {
                Object[] s = (Object[])params[i];
                // strip the 5-character bind variable prefix
                // (presumably "Bind_", as in Bind_DepartmentId)
                String attribute = s[0].toString().substring(5);
                filterList.add(new EqualsFilter("get" + attribute, s[1]));
            }
        }

        Set foundRows;
        if (filterList.size() > 0) {
            // combine the single filters with an AllFilter (logical AND)
            Filter[] finalEqualsFilterArray =
                filterList.toArray(new Filter[filterList.size()]);
            foundRows = cache.entrySet(new AllFilter(finalEqualsFilterArray));
        } else {
            // no criteria: every entry whose first (key) attribute is set
            foundRows = cache.entrySet(
                new IsNotNullFilter("get" + getAttributeDef(0).getName()));
        }

        // keep one iterator per query collection, so master and detail
        // collections don't share (and exhaust) the same iterator
        setUserDataForCollection(qc, foundRows.iterator());
        super.executeQueryForCollection(qc, params, noUserParams);
    }

    protected boolean hasNextForCollection(Object qc) {
        boolean retVal = ((Iterator)getUserDataForCollection(qc)).hasNext();
        if (!retVal) {
            setFetchCompleteForCollection(qc, true);
        }
        return retVal;
    }

    /**
     * createRowFromResultSet - overridden for custom java data source support.
     */
    protected ViewRowImpl createRowFromResultSet(Object qc,
                                                 ResultSet resultSet) {
        ViewRowImpl r = createNewRowForCollection(qc);
        AttributeDefImpl[] allDefs = (AttributeDefImpl[])getAttributeDefs();
        Map.Entry entry =
            (Map.Entry)((Iterator)getUserDataForCollection(qc)).next();

        // loop through all attributes and fill them from the cached object's getters
        Object dynamicPojoFromCache = entry.getValue();
        Class<?> dynamicPojoClass = dynamicPojoFromCache.getClass();
        for (int iAtts = 0; iAtts < allDefs.length; iAtts++) {
            AttributeDefImpl single = allDefs[iAtts];
            try {
                Method m = dynamicPojoClass.getMethod("get" + single.getName());
                Object result = m.invoke(dynamicPojoFromCache);
                // populate the attribute
                super.populateAttributeForRow(r, iAtts, result);
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
        return r;
    }

    /**
     * Row count estimate. Until the fetch is complete this returns the size
     * of the whole cache, not the number of rows matching the filter.
     */
    public long getQueryHitCount(ViewRowSetImpl viewRowSet) {
        if (viewRowSet.isFetchComplete()) {
            return viewRowSet.getFetchedRowCount();
        }
        if (cache == null) {
            cache = CacheFactory.getCache(getViewDef().getName());
        }
        return cache.size();
    }
}
```

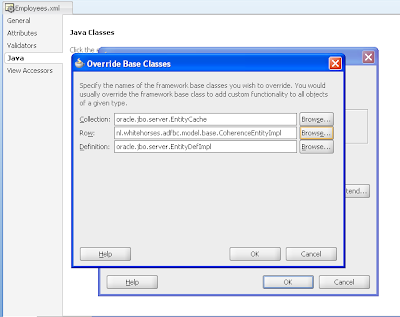

Override the view object class with this ViewObjectImpl.
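The filtering in `executeQueryForCollection` boils down to this: call `get<Attribute>()` on every cached object and keep it only if all criteria are equal (an `AllFilter` of `EqualsFilter`s). Here is a plain-Java sketch of that logic over an ordinary `Map`, handy for reasoning about why a page shows no rows. The sample class and data are hypothetical, and Coherence evaluates the real filters inside the cluster rather than like this. The bind-variable helper assumes the view link bind variables are named `Bind_<Attribute>`.

```java
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Plain-JDK sketch of the AllFilter-of-EqualsFilters query in CoherenceViewObjectImpl.
final class CacheQuerySketch {
    // Hypothetical cached POJO standing in for the Employees EJB entity.
    static final class SampleEmp {
        private final Long employeeId;
        private final Long departmentId;
        SampleEmp(Long employeeId, Long departmentId) {
            this.employeeId = employeeId;
            this.departmentId = departmentId;
        }
        public Long getEmployeeId() { return employeeId; }
        public Long getDepartmentId() { return departmentId; }
    }

    private CacheQuerySketch() {
    }

    // Like EqualsFilter("get" + name, value) for every criterion, ANDed together.
    static boolean matches(Object pojo, Map<String, Object> criteria) {
        for (Map.Entry<String, Object> c : criteria.entrySet()) {
            try {
                Method getter = pojo.getClass().getMethod("get" + c.getKey());
                Object actual = getter.invoke(pojo);
                boolean equal = actual == null ? c.getValue() == null : actual.equals(c.getValue());
                if (!equal) {
                    return false;
                }
            } catch (Exception e) {
                throw new IllegalStateException("Cannot read " + c.getKey(), e);
            }
        }
        return true; // no criteria: everything matches
    }

    // Returns the cached values that match all criteria.
    static List<Object> query(Map<?, ?> cache, Map<String, Object> criteria) {
        List<Object> found = new ArrayList<Object>();
        for (Object pojo : cache.values()) {
            if (matches(pojo, criteria)) {
                found.add(pojo);
            }
        }
        return found;
    }

    // Assumes view link bind variables are named "Bind_<Attribute>".
    static String attributeFromBindVariable(String bindName) {
        String prefix = "Bind_";
        return bindName.startsWith(prefix) ? bindName.substring(prefix.length()) : bindName;
    }
}
```

One detail worth checking when no rows come back: equality is `equals()`, so an `Integer` criterion never matches a `Long` attribute, even when both hold 90.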

That's all.

Here is the JDeveloper 11gR2 example on GitHub:

https://github.com/biemond/jdev11gR2_examples/tree/master/Coherence_ADF_BC

Important:

Download Coherence from OTN and put the jars in the coherence\lib folder.

Rename JDeveloper's internal Coherence folder, otherwise you can't connect to the cache.

Update the project options with your own Coherence settings, as well as the WebLogic run options of the application.

Start Coherence and fill the Coherence cache before running.

Great post, Edwin! Over the last few months I have been interested in how Coherence might assist with both the performance and scalability of large-scale ADF BC apps. The technique you and Clemens describe uses Coherence to persist the state of BC objects across the middle tier, but I'm interested in what happens to AM instance passivation. Have you had any thoughts on that?

Hi Simon,

That should work. You can make your own application module impl with activate and passivate methods that store the state in, or retrieve it from, the cache.

Instead of using the PS_TXN table you can then use Coherence; the only thing is that the Java object has to be serializable (I think it is a DOM object). If I have the time I'll write a blog post about it.

Thanks, Edwin

Hi Simon,

I did some research: you have to make your own CoherenceSerializer that extends Serializer, and then override getAMSerializer in the ApplicationModuleImpl.

The problem is that the CoherenceSerializer has to be in the oracle.jbo.server package, otherwise I can't access the required methods or variables.

I will look for a workaround.

Thanks, Edwin

Hi Edwin,

Good post, I must say. What I don't understand is how you can say you don't have to know Java when all the generated code is Java and the overridden methods are Java. In that respect I think you are misleading people who don't have a Java background.

PS: I am a Java person with over 7 years of experience in Java alone.

Regards,

Elester

Hi Edwin,

Great post.

I'm having an issue following your instructions: the table result is empty. If I remove the override classes for the BC it works (obviously without Coherence), but once I override the classes I get no data in the tables.

I see the classes being loaded in the Coherence log.

Is there some trick that I missed?

What about the persistence file? There are differences between what you have here and what you have in the code sample:

org.eclipse.persistence.jpa.PersistenceProvider

nl.amis.jpa.model.Departments

nl.amis.jpa.model.Employees

Thanks and Regards

Paco

Hi,

Did you fill the cache and rename the JDeveloper Coherence jars?

Thanks


Hi,

I am wondering if there is any way to completely eliminate the JDBC database connection from the application module, since your entity object still seems to need a table in the DB behind it.

This is because my EO and VO are backed entirely by Coherence, and I don't want to create any "stub" table in the DB for them.

Thanks in advance for your answer.

Clark

Hi,

I think ADF BC and JPA always need a database connection; you only avoid that when you call Coherence directly from a managed bean or an EJB.

Also, an ADF BC application module always has a configuration profile.

But you can use a custom VO, just like the web service VO examples, that calls Coherence instead; then you won't need an EO.

Thanks